Research

The Computer Vision group at UBC focuses on developing and building state-of-the-art algorithms and prototypes for visually intelligent systems. We tackle a broad spectrum of problems, from low-level vision to high-level visual semantic understanding. Applications are equally broad, spanning robotics, sport analytics, health care, and visual media curation and annotation.

Sport Analytics

Sports video analysis offers an opportunity to address a wide range of vision tasks, from tracking to re-identification to action recognition to understanding the cooperative and competitive actions of groups of team members. By tackling this wide range of problems in the constrained context of sports (hockey, soccer, football), we can make progress on sub-problems (such as pose and action recognition) that are valuable in more general settings such as smart homes.

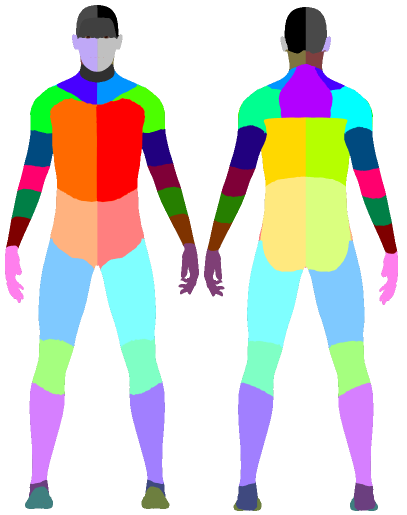

Human Pose Estimation and Activity Recognition

Recovering human pose and understanding human behavior is a very difficult problem that dates back to the beginnings of computer vision. AI-driven automated systems will eventually need to interact with humans, which requires an ability to reason about and interpret our subtle behaviors. Such algorithms are also critical for a variety of important applications, including gaming, security, analytics, virtual retail and many others. The topics we are working on in this space are: person detection and tracking, human pose estimation, activity recognition, situation recognition, and event recognition.

Vision and Language

As humans, our main modality for perception is vision, and for communication it is language. These two modalities are closely tied and enable us to navigate and engage in complex tasks. With the progress of AI, which has led to substantial advances in both visual and natural language processing, we are looking to move from learning from a single modality, such as vision, language or sound, to building integrated autonomous algorithms and systems that can interact with and learn from humans through language. The topics we are working on in this space are: image and video captioning, visual question answering and dialog systems, visual language grounding, alignment of text and video, natural language-based image/video search and retrieval, and zero-shot learning.

Visual Imagination (Generative) Models

Humans have an amazing ability to hypothesize and create visual depictions of described scenes, or of objects from novel views. This imagination, or controlled hallucination, is at least in part responsible for transference and for our ability to efficiently learn concepts from few instantiations. With the progress of deep generative models, such as Variational Autoencoders and Generative Adversarial Networks, we are starting to strive towards similar successes in automated visual systems. The topics we are working on in this space are: disentangled representations, image-to-image translation, generating realistic visual depictions from linguistic or layout descriptions, and video generation.